HADOOP-PR000007 Exam Questions & Answers

Exam Code: HADOOP-PR000007

Exam Name: Hortonworks Certified Apache Hadoop 2.0 Developer (Pig and Hive Developer)

Updated: Nov 13, 2024

Q&As: 108

At Passcerty.com, we pride ourselves on the comprehensive nature of our HADOOP-PR000007 exam dumps, designed meticulously to encompass all key topics and nuances you might encounter during the real examination. Regular updates are a cornerstone of our service, ensuring that our dedicated users always have their hands on the most recent and relevant Q&A dumps. Behind every meticulously curated question and answer lies the hard work of our seasoned team of experts, who bring years of experience and knowledge into crafting these premium materials. And while we are invested in offering top-notch content, we also believe in empowering our community. As a token of our commitment to your success, we're delighted to offer a substantial portion of our resources for free practice. We invite you to make the most of the following content, and wish you every success in your endeavors.

Download Free Hortonworks HADOOP-PR000007 Demo

Experience Passcerty.com exam material in PDF version.

Simply submit your e-mail address below to get started with our PDF real exam demo of your Hortonworks HADOOP-PR000007 exam.

![]() Instant download

Instant download

![]() Latest update demo according to real exam

Latest update demo according to real exam

* Our demo shows only a few questions from your selected exam for evaluating purposes

Free Hortonworks HADOOP-PR000007 Dumps

Practice These Free Questions and Answers to Pass the HCAHD Exam

What is the disadvantage of using multiple reducers with the default HashPartitioner and distributing your workload across you cluster?

A. You will not be able to compress the intermediate data.

B. You will longer be able to take advantage of a Combiner.

C. By using multiple reducers with the default HashPartitioner, output files may not be in globally sorted order.

D. There are no concerns with this approach. It is always advisable to use multiple reduces.

You are developing a MapReduce job for sales reporting. The mapper will process input keys representing the year (IntWritable) and input values representing product indentifies (Text).

Indentify what determines the data types used by the Mapper for a given job.

A. The key and value types specified in the JobConf.setMapInputKeyClass and JobConf.setMapInputValuesClass methods

B. The data types specified in HADOOP_MAP_DATATYPES environment variable

C. The mapper-specification.xml file submitted with the job determine the mapper's input key and value types.

D. The InputFormat used by the job determines the mapper's input key and value types.

You need to create a job that does frequency analysis on input data. You will do this by writing a Mapper that uses TextInputFormat and splits each value (a line of text from an input file) into individual characters. For each one of these characters, you will emit the character as a key and an InputWritable as the value. As this will produce proportionally more intermediate data than input data, which two resources should you expect to be bottlenecks?

A. Processor and network I/O

B. Disk I/O and network I/O

C. Processor and RAM

D. Processor and disk I/O

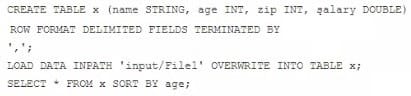

Examine the following Hive statements:

Assuming the statements above execute successfully, which one of the following statements is true?

A. Each reducer generates a file sorted by age

B. The SORT BY command causes only one reducer to be used

C. The output of each reducer is only the age column

D. The output is guaranteed to be a single file with all the data sorted by age

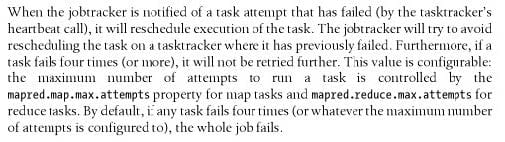

You wrote a map function that throws a runtime exception when it encounters a control character in input data. The input supplied to your mapper contains twelve such characters totals, spread across five file splits. The first four file splits each have two control characters and the last split has four control characters.

Indentify the number of failed task attempts you can expect when you run the job with mapred.max.map.attempts set to 4:

A. You will have forty-eight failed task attempts

B. You will have seventeen failed task attempts

C. You will have five failed task attempts

D. You will have twelve failed task attempts

E. You will have twenty failed task attempts

Viewing Page 1 of 3 pages. Download PDF or Software version with 108 questions